Making medical images make sense

For many people, the rise of artificial intelligence-generated images has sparked anxiety — about misinformation, deepfakes and the blurring line between what’s real and what’s not. But in the world of medical imaging, realism isn’t the problem — it’s the goal.

When it comes to using AI to assist in disease diagnosis, sharpen noisy scans or reconstruct entire images from limited data, clinicians must be confident that the technology they rely on is producing detailed and accurate results.

That question of accuracy — how closely synthetic images mirror their real counterparts — is what William & Mary Associate Professor of Mathematics GuanNan Wang set out to answer. Along with researchers from Yale University, the University of Virginia and George Mason University, she recently co-authored a paper in the Journal of the American Statistical Association, one of the most highly regarded journals in statistical science, evaluating the fidelity of AI-generated medical images.

The team developed a novel statistical inference tool to rigorously identify differences between synthetic and real medical images. Their analysis revealed systematic gaps, and to address them, they designed and tested a new mathematical transformation that brings AI-generated images into much closer alignment with authentic scans — a step toward the safe and reliable use of synthetic medical data in clinical settings.

“Generative AI opens up exciting opportunities to revolutionize the medical field,” said Wang. “But researchers need to prove, through careful and rigorous evaluation, that healthcare providers can trust these new technologies before they’re used to guide decisions about real patients.”

Reimagining medical imaging

Data scarcity is a major challenge in applying AI to healthcare — one Wang has experienced firsthand. For more than a decade, she’s studied the progression of Alzheimer’s disease by examining patients’ brain scans, genetic profiles and demographic data in search of clues as to what drives disease progression. Yet many patient records are incomplete, often missing MRI images, which makes it difficult to connect these data sources. Using generative AI, Wang hopes to fill in those missing pieces.

“By training an AI algorithm on the patients who have brain scans and at least one more piece of data — whether demographic or genetic — we can create a model that predicts what the brain scans might look like for patients who lack the imaging component,” said Wang. “Those synthetic images can then help augment our existing datasets, giving us a better chance to uncover the relationships between patient characteristics and disease progression.”

Guidelines protecting patient privacy make it difficult for hospitals and researchers to share medical images. The cost and time associated with having medical experts take and annotate these images are other challenges contributing to data scarcity.

These problems are compounded when trying to develop a diagnostic algorithm for a rare disease, when even fewer scans exist, or when trying to characterize images associated with certain underrepresented demographics, such as pediatric cases.

“Synthetic images can help address the challenge of data scarcity by generating large numbers of new medical images,” said Wang. “Because these images are not linked to any individual patient, they can also reduce privacy concerns.”

Researchers have developed a number of methods to create synthetic images. One example of a widely known approach is the generative adversarial network (GAN), where two AI networks compete — one generates images while the other tries to detect the fakes — until the synthetic scans become nearly indistinguishable from real ones.

But before clinicians start relying on these synthetic images, they need to know how accurate they are, a question Wang set out to answer.

“Even though we can generate synthetic images, are they useful? Can we trust them?” she asked. “They may look like real images, but statistically or mathematically they might not align with the real ones.”

In the world of medicine, where the consequences of making decisions based on faulty data can be catastrophic, rigorous evaluation methods are needed to interrogate these questions.

Seeing the forest and the trees

Most existing statistical strategies for comparing synthetic and real images rely on a voxel-by-voxel (a voxel is a 3D pixel) analysis. But comparing hundreds of complex images with thousands to millions of voxels each quickly becomes a statistical nightmare, and accuracy pays the price. Additionally, looking at images voxel-wise divorces them from the complex spatial geometry of organs like the brain. Think about being sent an image pixel by pixel and then being asked what the image depicted.

Other research areas, such as machine learning and computer vision, have developed more holistic measures, including Fréchet Inception Distance, Kullback-Leibler divergence and total variation distance, to capture global distributional differences.

“These comparisons typically rely on global metrics — that is, they compare overall differences between AI-generated and real images,” Wang said. “But in healthcare, clinically important differences often appear only in small subregions, such as subtle changes between normal and diseased tissue. It’s precisely these minute variations that evaluation methods need to detect.”
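A toy calculation can make Wang's point concrete. The sketch below is an illustration only, not the researchers' method: it treats the normalized intensities of a single 1,000-voxel "image" as a discrete distribution (a simplification; in practice these metrics compare distributions over whole images), plants a hypothetical artifact in five voxels, and computes total variation distance and Kullback-Leibler divergence. All names and numbers are assumptions chosen for the example.

```python
import math

def total_variation(p, q):
    """Total variation distance between two discrete distributions."""
    return 0.5 * sum(abs(a - b) for a, b in zip(p, q))

def kl_divergence(p, q):
    """Kullback-Leibler divergence KL(p || q); assumes q > 0 wherever p > 0."""
    return sum(a * math.log(a / b) for a, b in zip(p, q) if a > 0)

def normalize(values):
    """Rescale non-negative intensities so they sum to 1."""
    total = sum(values)
    return [v / total for v in values]

# Toy "real" image: 1,000 voxels, all with intensity 1.0.
n_voxels = 1000
real = [1.0] * n_voxels
synthetic = list(real)

# Hypothetical local artifact: five voxels are 50% brighter in the synthetic image.
for i in range(100, 105):
    synthetic[i] = 1.5

p, q = normalize(real), normalize(synthetic)
tv = total_variation(p, q)
kl = kl_divergence(p, q)
max_local_diff = max(abs(a - b) for a, b in zip(real, synthetic))

print(f"total variation distance: {tv:.5f}")
print(f"KL divergence:            {kl:.5f}")
print(f"largest voxel difference: {max_local_diff:.2f}")
```

Both global scores come out close to zero even though the affected voxels differ by half their intensity, which is the kind of localized discrepancy a global summary can wash out.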

To create their synthetic images, Wang and her colleagues first collected functional MRI (fMRI) brain scans from patients who were asked to tap their fingers at specific intervals. They then trained an AI tool called a Denoising Diffusion Probabilistic Model (DDPM) by gradually adding random noise to the brain scans until the images dissolved into pure static.

Observing this process, their DDPM learned how to reverse it — starting from noise and reconstructing brain scans that resembled the originals. Think of it like a digital windshield wiper, turning a blurry piece of glass into a clear picture.
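The noising half of that process has a simple closed form in the standard DDPM formulation, sketched below. This is a minimal illustration, not the team's code: the linear noise schedule, the 1,000 steps and the four-number "image" are assumptions, and the learned reverse (denoising) network is omitted entirely.

```python
import math
import random

def linear_beta_schedule(num_steps, beta_start=1e-4, beta_end=0.02):
    """Per-step noise variances; the linear schedule is an assumption for this sketch."""
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + i * step for i in range(num_steps)]

def cumulative_alpha(betas):
    """alpha_bar_t = product over s <= t of (1 - beta_s)."""
    alpha_bars, running = [], 1.0
    for beta in betas:
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars

def add_noise(image, alpha_bar, rng):
    """Sample x_t = sqrt(alpha_bar) * x_0 + sqrt(1 - alpha_bar) * noise."""
    signal_weight = math.sqrt(alpha_bar)
    noise_weight = math.sqrt(1.0 - alpha_bar)
    return [signal_weight * x + noise_weight * rng.gauss(0.0, 1.0) for x in image]

rng = random.Random(0)
image = [1.0, 0.5, -0.5, -1.0]  # stand-in for a flattened scan
alpha_bars = cumulative_alpha(linear_beta_schedule(1000))

for t in (0, 249, 499, 999):
    noisy = add_noise(image, alpha_bars[t], rng)
    print(f"step {t + 1:4d}: signal weight {math.sqrt(alpha_bars[t]):.3f}")
```

The signal weight shrinks toward zero as the step count grows, so the final sample is almost entirely noise. Training teaches a network to undo one small step at a time, which is what lets the model start from static and work back to an image.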

They then used a method called Functional Data Analysis (FDA), which treats each image as a continuous function. Using this framework, they constructed simultaneous confidence regions, statistical inferences that capture uncertainty across the whole brain domain, to compare the real and synthetic images. To account for the complex geometry of the brain scans, they projected the brains onto a sphere, which allowed for an easier one-to-one comparison of different brain regions.
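To see why a simultaneous criterion differs from testing each voxel on its own, consider the rough sketch below. It does not reproduce the paper's FDA-based confidence regions; it swaps in a simpler, well-known stand-in, a permutation max-statistic, on hypothetical one-dimensional "scans" with independent noise. The sample sizes, the shifted region and every function name are assumptions made for illustration.

```python
import math
import random

def mean_and_var(rows):
    """Voxel-wise mean and unbiased variance across a group of images."""
    n, width = len(rows), len(rows[0])
    means = [sum(r[v] for r in rows) / n for v in range(width)]
    variances = [
        sum((r[v] - means[v]) ** 2 for r in rows) / (n - 1) for v in range(width)
    ]
    return means, variances

def t_statistics(group_a, group_b):
    """Voxel-wise Welch t-statistics for the difference in group means."""
    mean_a, var_a = mean_and_var(group_a)
    mean_b, var_b = mean_and_var(group_b)
    return [
        (ma - mb) / math.sqrt(va / len(group_a) + vb / len(group_b))
        for ma, mb, va, vb in zip(mean_a, mean_b, var_a, var_b)
    ]

def simultaneous_threshold(group_a, group_b, rng, num_permutations=200, level=0.95):
    """Quantile of the maximum |t| over all voxels when group labels are shuffled."""
    pooled = group_a + group_b
    max_stats = []
    for _ in range(num_permutations):
        shuffled = pooled[:]
        rng.shuffle(shuffled)
        stats = t_statistics(shuffled[:len(group_a)], shuffled[len(group_a):])
        max_stats.append(max(abs(s) for s in stats))
    max_stats.sort()
    return max_stats[math.ceil(level * num_permutations) - 1]

rng = random.Random(0)
num_images, num_voxels = 30, 100

def toy_image(shift_region=False):
    """Noise-only image; optionally raise voxels 50-59 to mimic a synthetic artifact."""
    img = [rng.gauss(0.0, 1.0) for _ in range(num_voxels)]
    if shift_region:
        for v in range(50, 60):
            img[v] += 2.0
    return img

real = [toy_image() for _ in range(num_images)]
synthetic = [toy_image(shift_region=True) for _ in range(num_images)]

stats = t_statistics(synthetic, real)
threshold = simultaneous_threshold(synthetic, real, rng)
flagged_pointwise = [v for v, s in enumerate(stats) if abs(s) > 1.96]
flagged_simultaneous = [v for v, s in enumerate(stats) if abs(s) > threshold]

print(f"simultaneous threshold: {threshold:.2f}")
print(f"voxels flagged pointwise:      {flagged_pointwise}")
print(f"voxels flagged simultaneously: {flagged_simultaneous}")
```

The pointwise cutoff of 1.96 controls error at each voxel separately, so some unaffected voxels are likely to be flagged by chance. The simultaneous cutoff is calibrated against the largest statistic anywhere in the image, so it is stricter and controls error across the whole domain at once.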

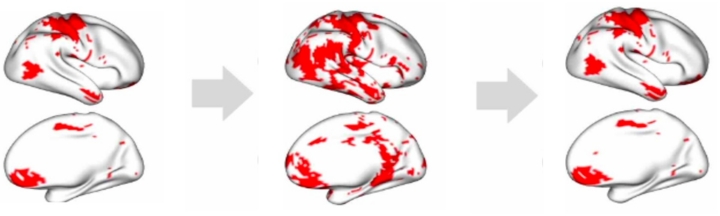

Using these techniques, the researchers compared two summaries of the images: the mean (what the average of all the synthetic images looked like compared with the average of all the real images) and the covariance, which measures how changes in one voxel relate to changes in others across space.

They quickly found some discrepancies between their synthetic data and the real images.

“We saw areas of the brain lighting up that shouldn’t have been, showing us that our AI-generated images weren’t fully mirroring the original data,” said Wang.

To remedy that, the scientists, again using FDA, came up with a novel transformation to make the synthetic images much more closely aligned with the real images.

“Our work underscores the importance of establishing rigorous evaluation techniques that don’t just rely on global similarity, but look at the minute details of these images,” said Wang. “We hope this work is one additional step toward making AI-generated images more applicable and trustworthy in the medical field.”

Wrapping up a presentation in August at the Eighth International Conference on Econometrics and Statistics, Wang summed up the importance of such evaluation methods: “Generative AI can create images, but it is statistics that gives those images a scientific backbone. Without us, it’s art; with us, it becomes knowledge.”
